About Me

I am a tenure-track Assistant Professor and PI at Westlake University, where I lead the AGI Lab. Before joining Westlake University, I worked as a scientist at Tencent.

I obtained my Ph.D. degree at the School of Computer Science and Engineering, Nanyang Technological University (NTU), Singapore, where I worked under the supervision of Prof. Guosheng Lin. I also work closely with Prof. Chunhua Shen and Prof. Rui Yao in research. I was recognized among World's Top 2% Scientists by Stanford University in 2023 and 2024.

Research Interests

My current research focuses on Generative AI, including theoretical foundations of generative models, multimodal generative modeling, and multimodal intelligent agents. In the past, my work has also spanned broader areas in machine learning and computer vision.

News

Scroll for moreAcademic Service

- Associate Editor for TCSVT since 2024

- Area Chair for ICML 2026, ICLR 2026, CVPR 2026, ACL ARR 2025, ACM Multimedia 2025, IJCNN 2025

- Reviewer for T-PAMI, ICLR, CVPR, NeurIPS, etc.

Hobbies

I like singing and playing football. I am a loyal fan of FC Barcelona PSG Inter Miami .

My favorite singers are Jacky Cheung and Freddie Mercury.

Selected Projects

MeshAnything: Artist-Created Mesh Generation with Autoregressive Transformers

ICLR 2025

MeshAnything V2: Artist-Created Mesh Generation with Adjacent Mesh Tokenization

ICCV 2025

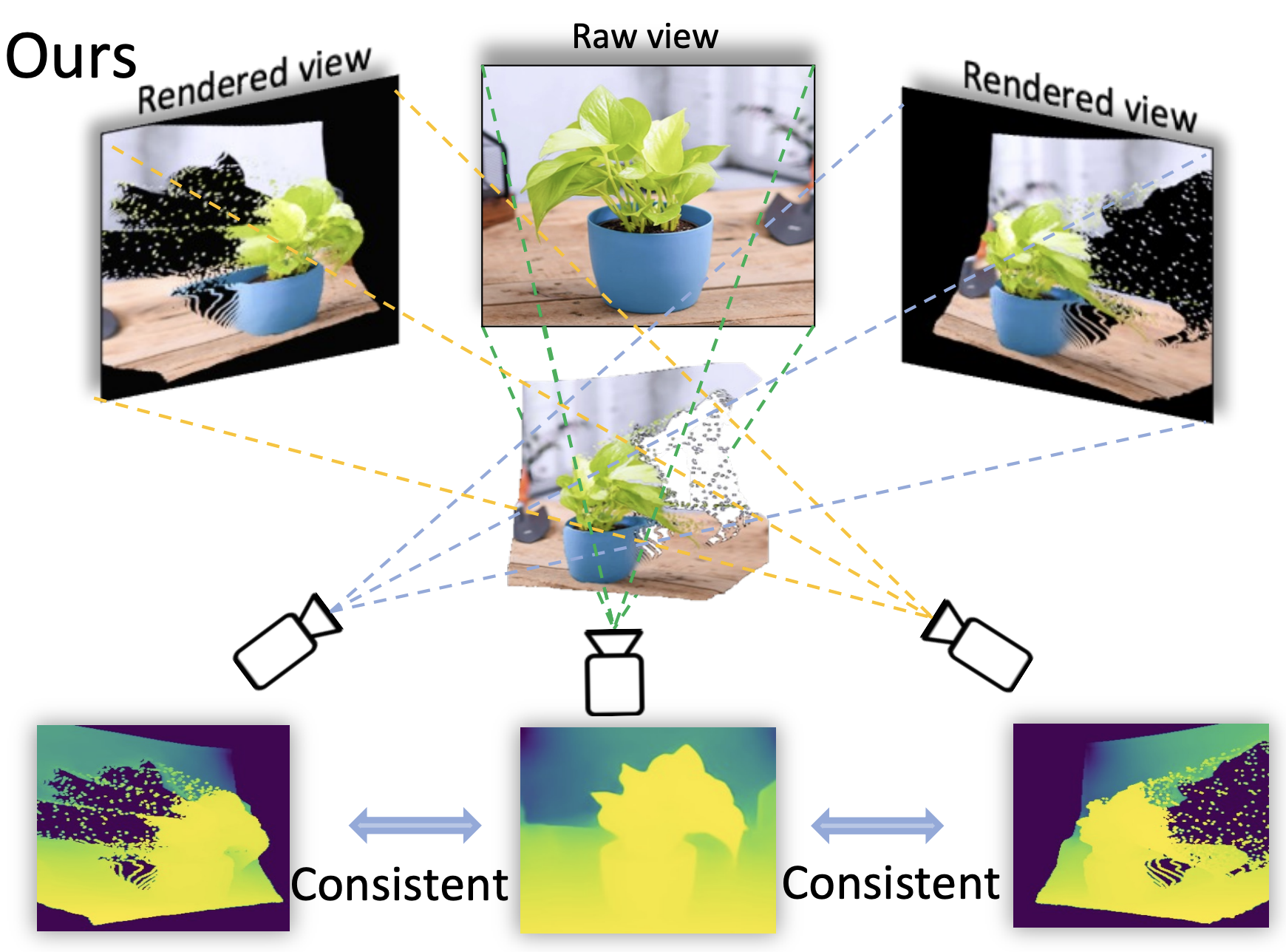

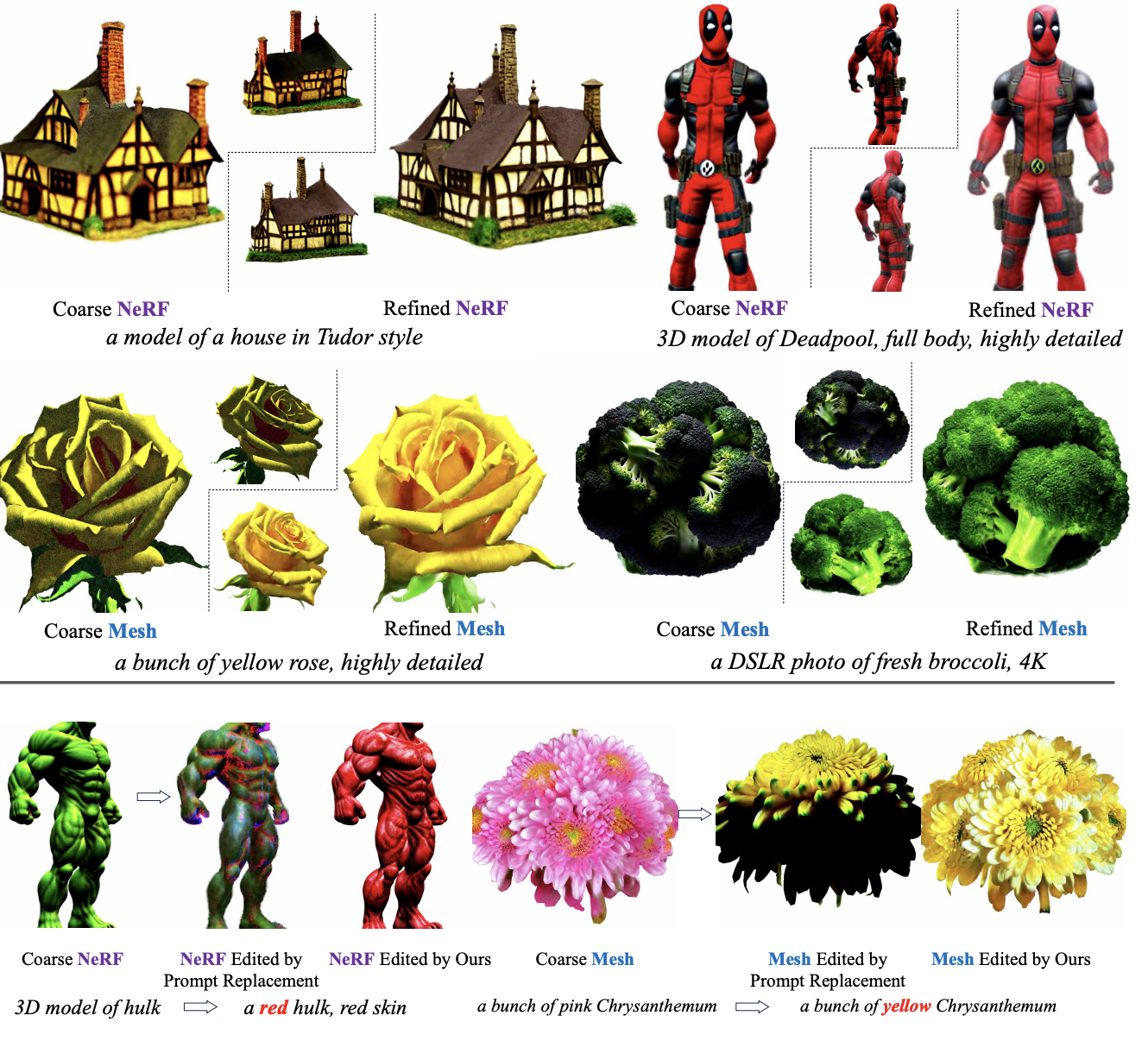

GaussianEditor: Swift and Controllable 3D Editing with Gaussian Splatting

CVPR 2024

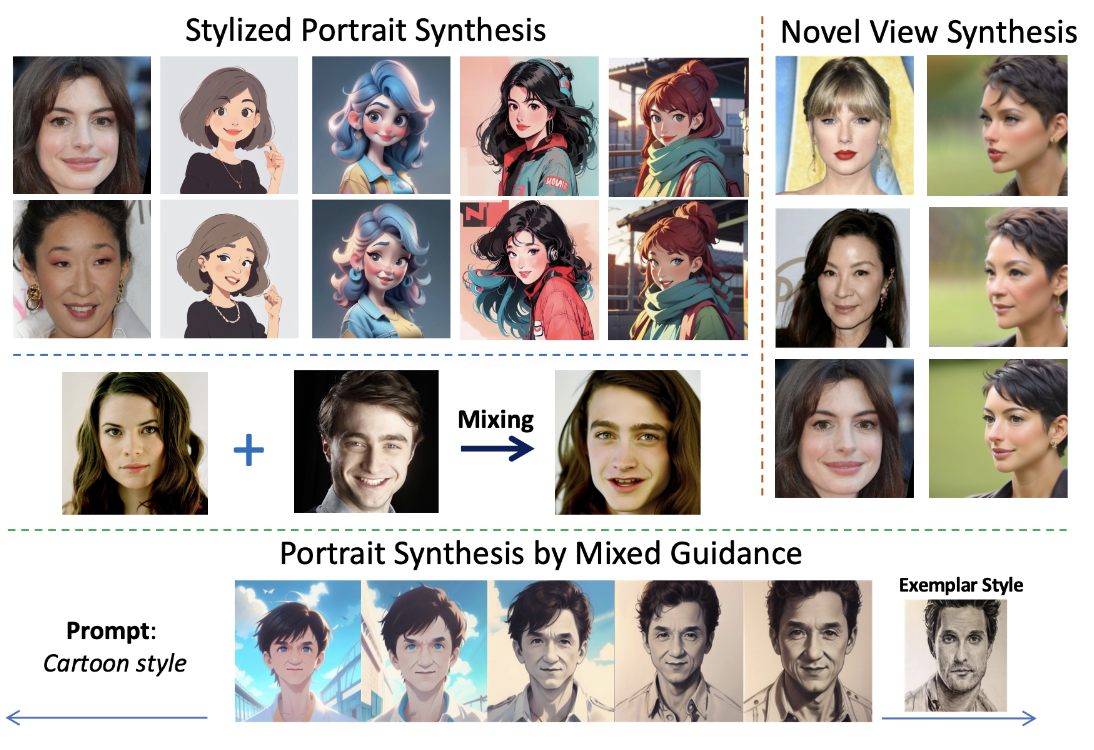

StyleStudio: Text-Driven Style Transfer with Selective Control of Style Elements

CVPR 2025

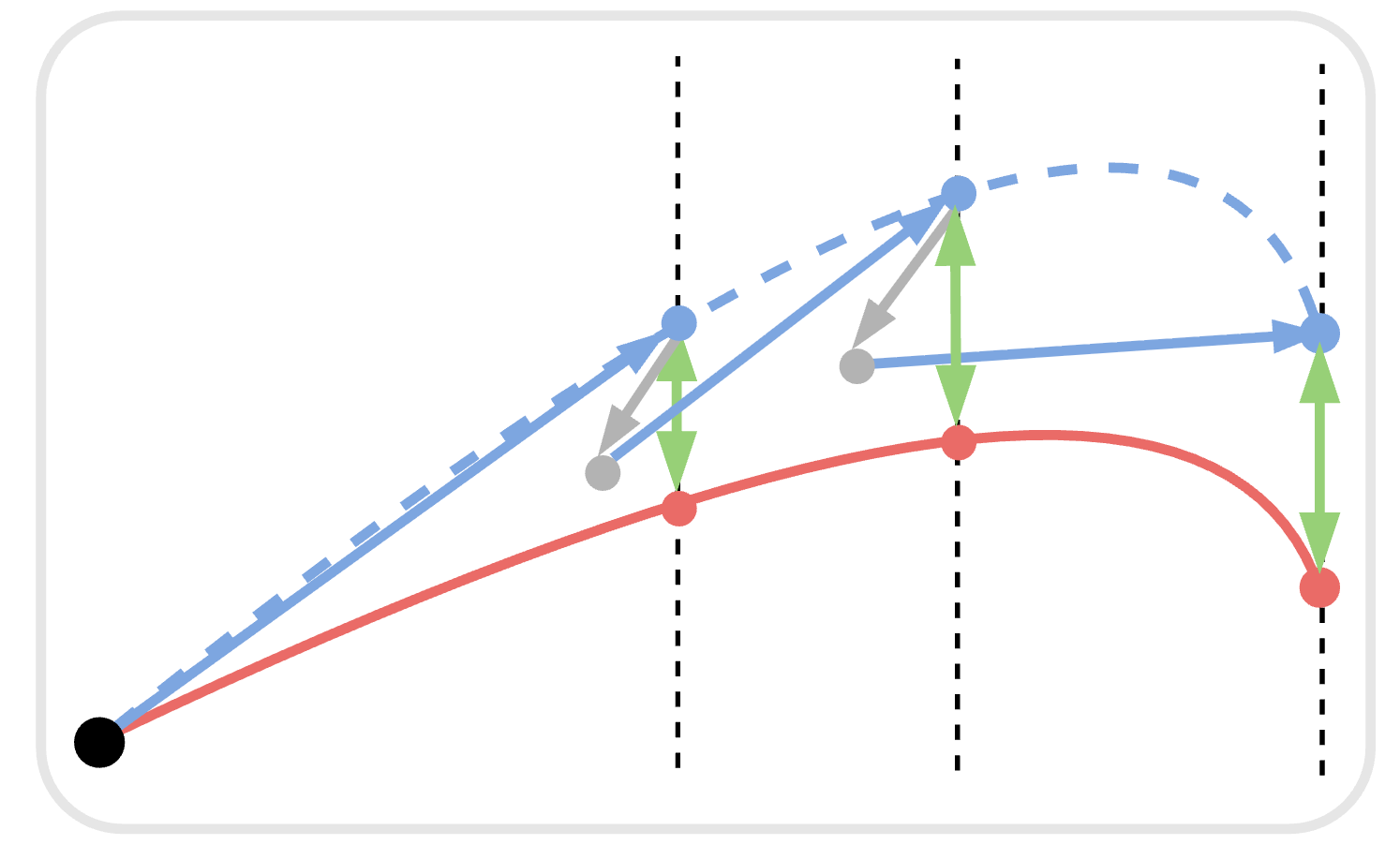

WorldForge: Unlocking Emergent 3D/4D Generation in Video Diffusion Model via Training-Free Guidance

Arxiv 2025

FlowDirector: Training-Free Flow Steering for Precise Text-to-Video Editing

Arxiv 2025

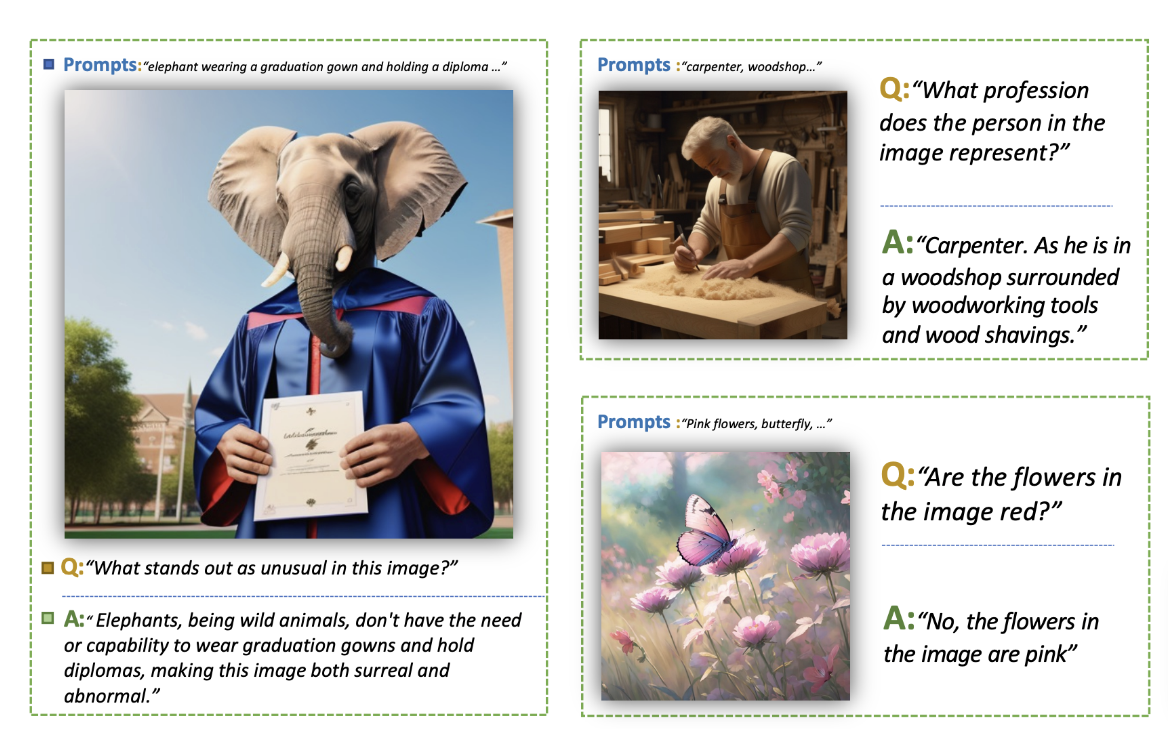

StableLLaVA: Enhanced Visual Instruction Tuning with Synthesized Image-Dialogue Data

ACL 2024

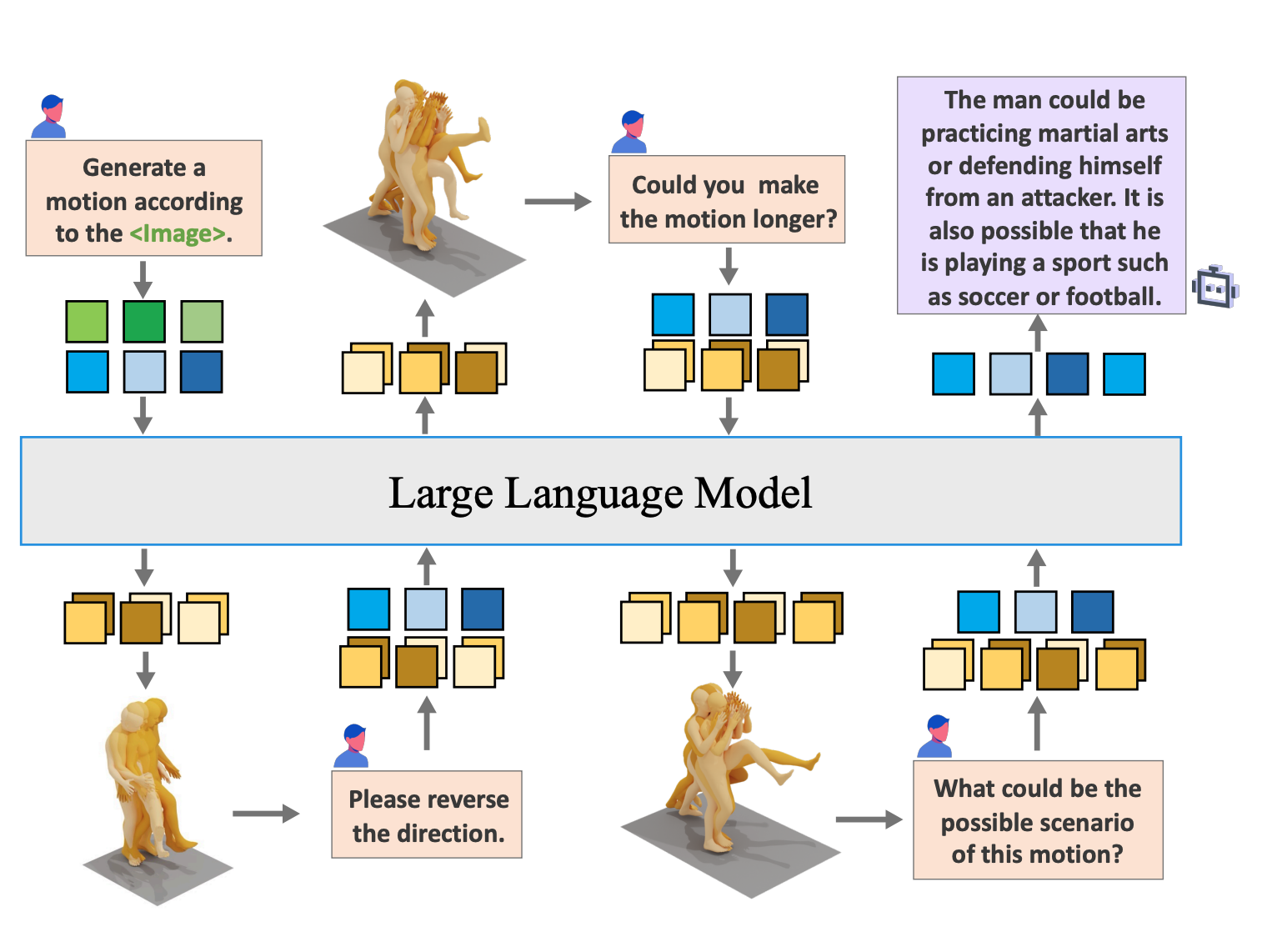

MotionChain: Conversational Motion Controllers via Multimodal Prompts

ECCV 2024

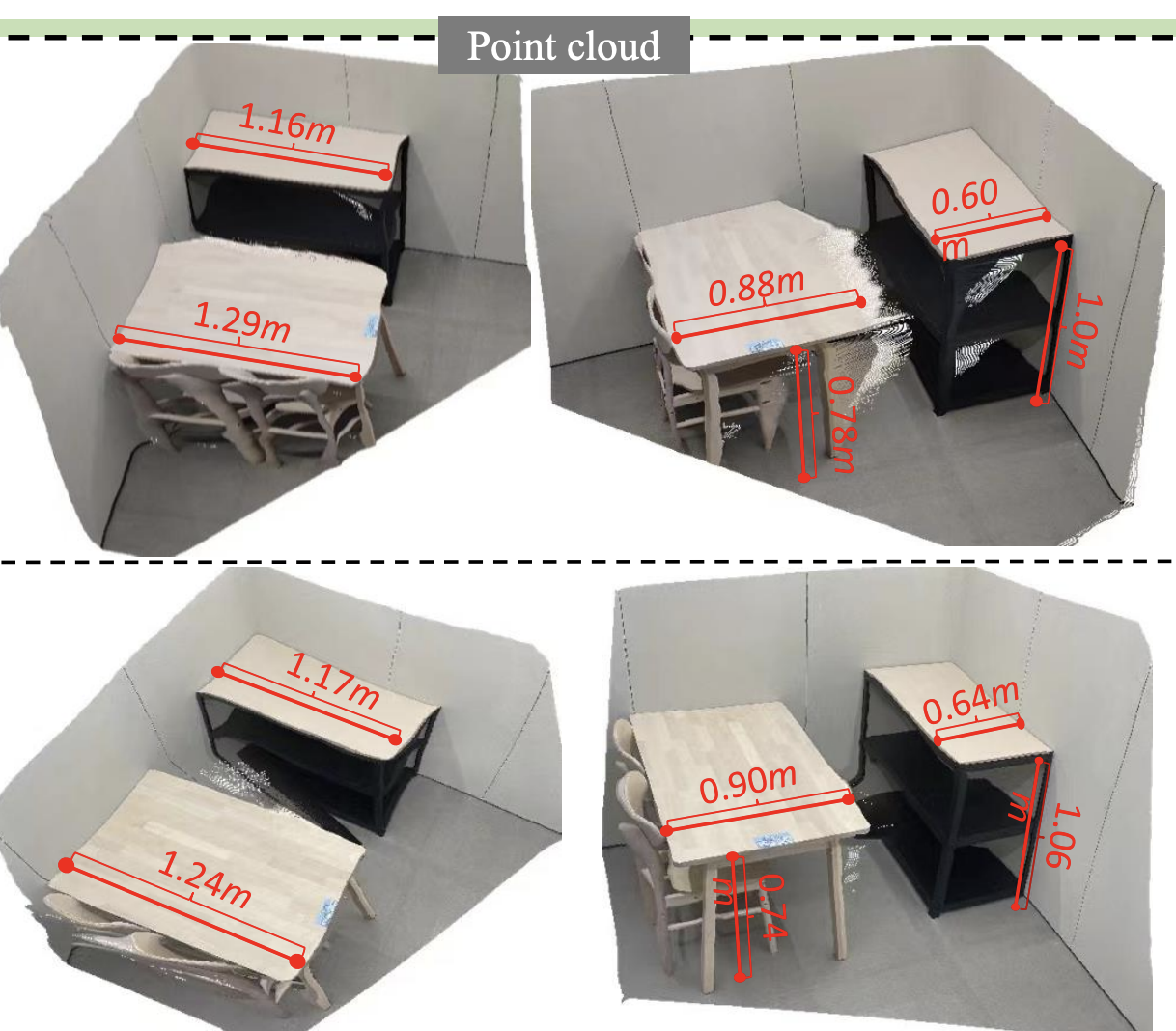

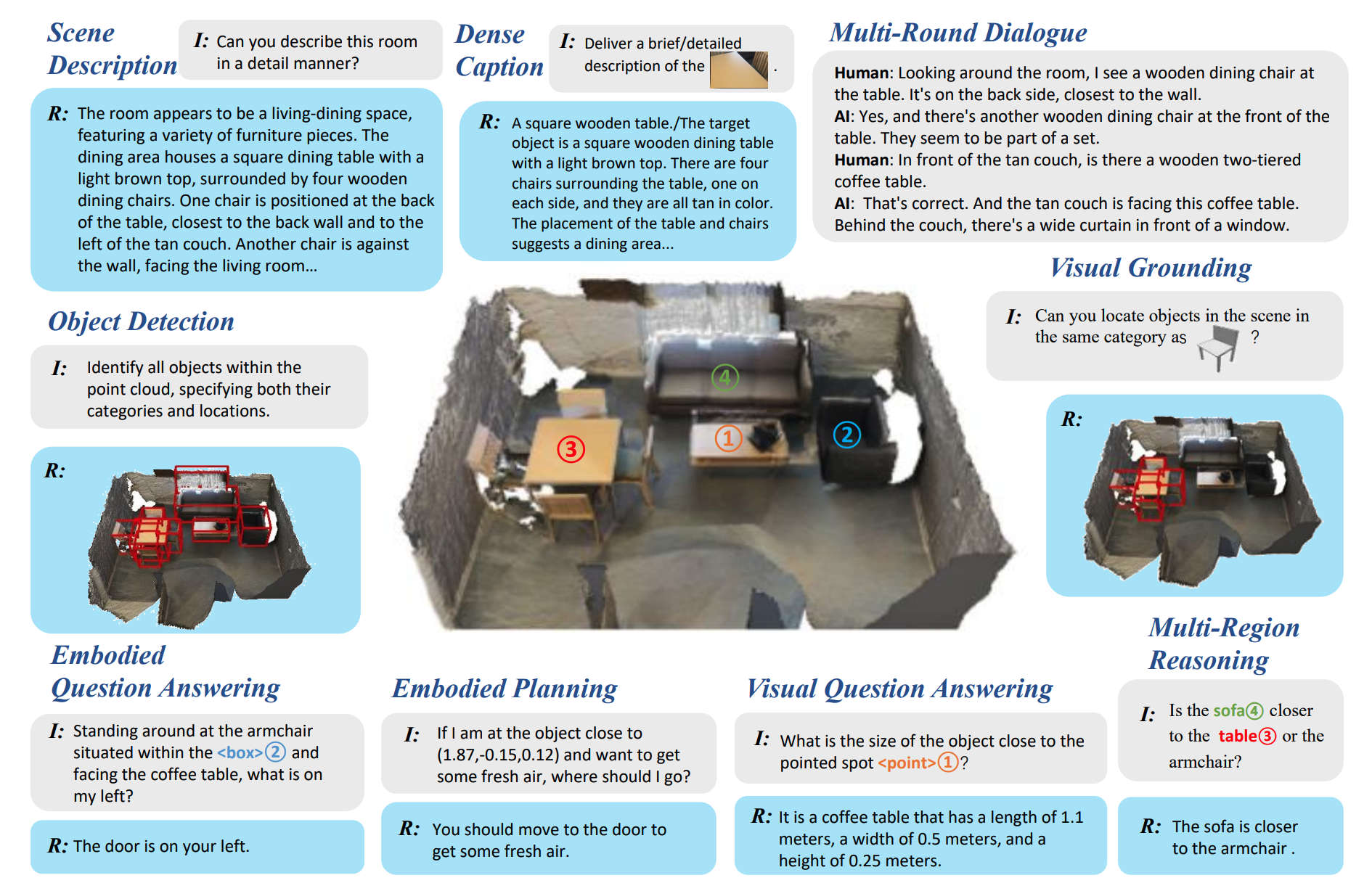

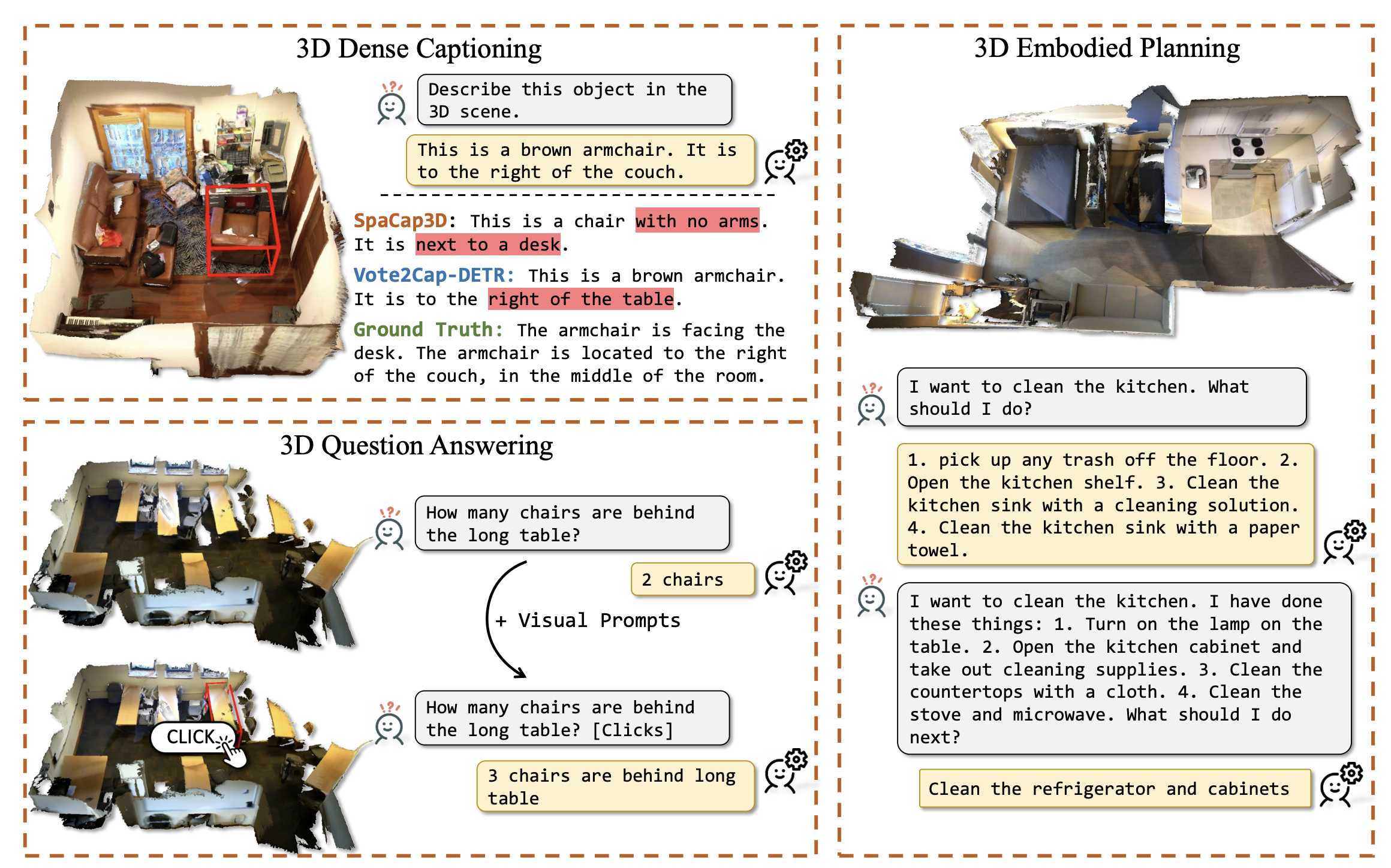

LL3DA: Visual Interactive Instruction Tuning for Omni-3D Understanding, Reasoning, and Planning.

CVPR 2024

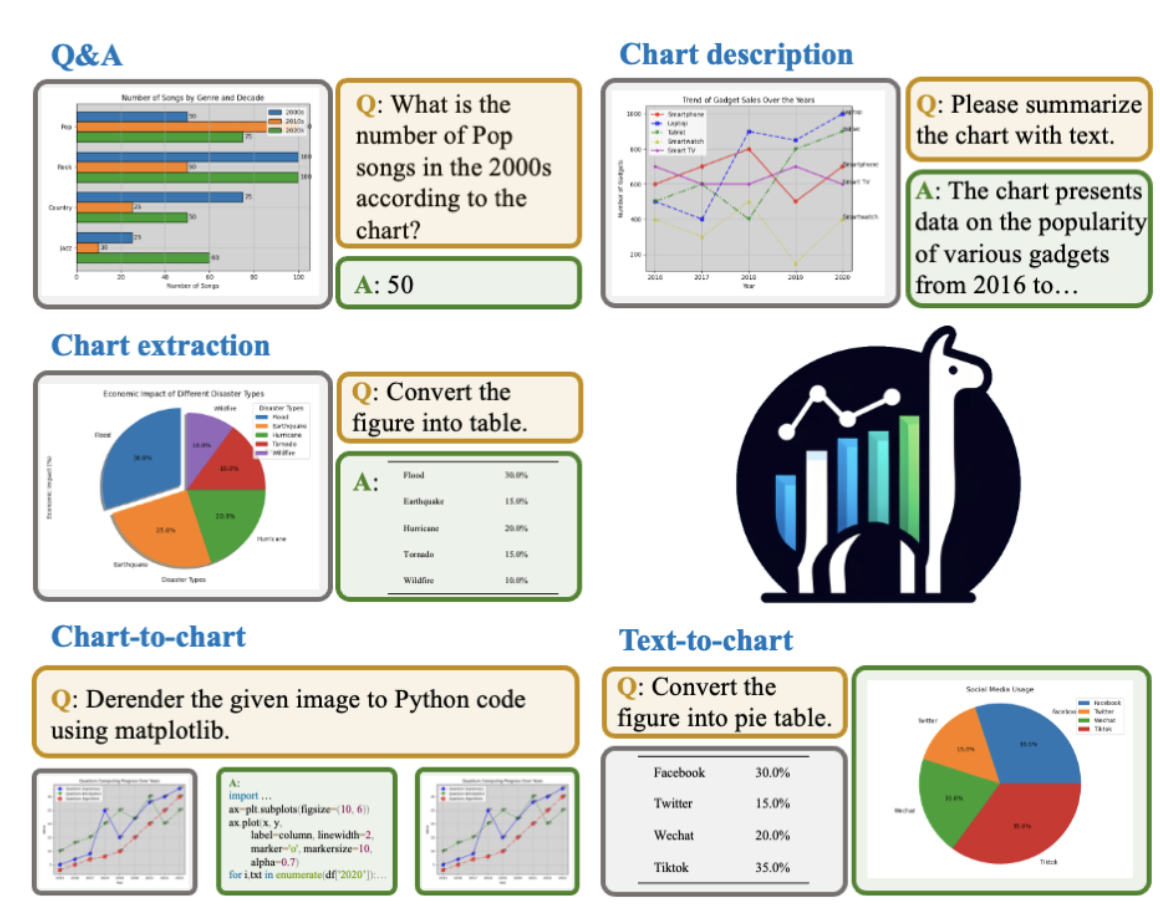

ChartLlama: A Multimodal LLM for Chart Understanding and Generation

Arxiv 2023

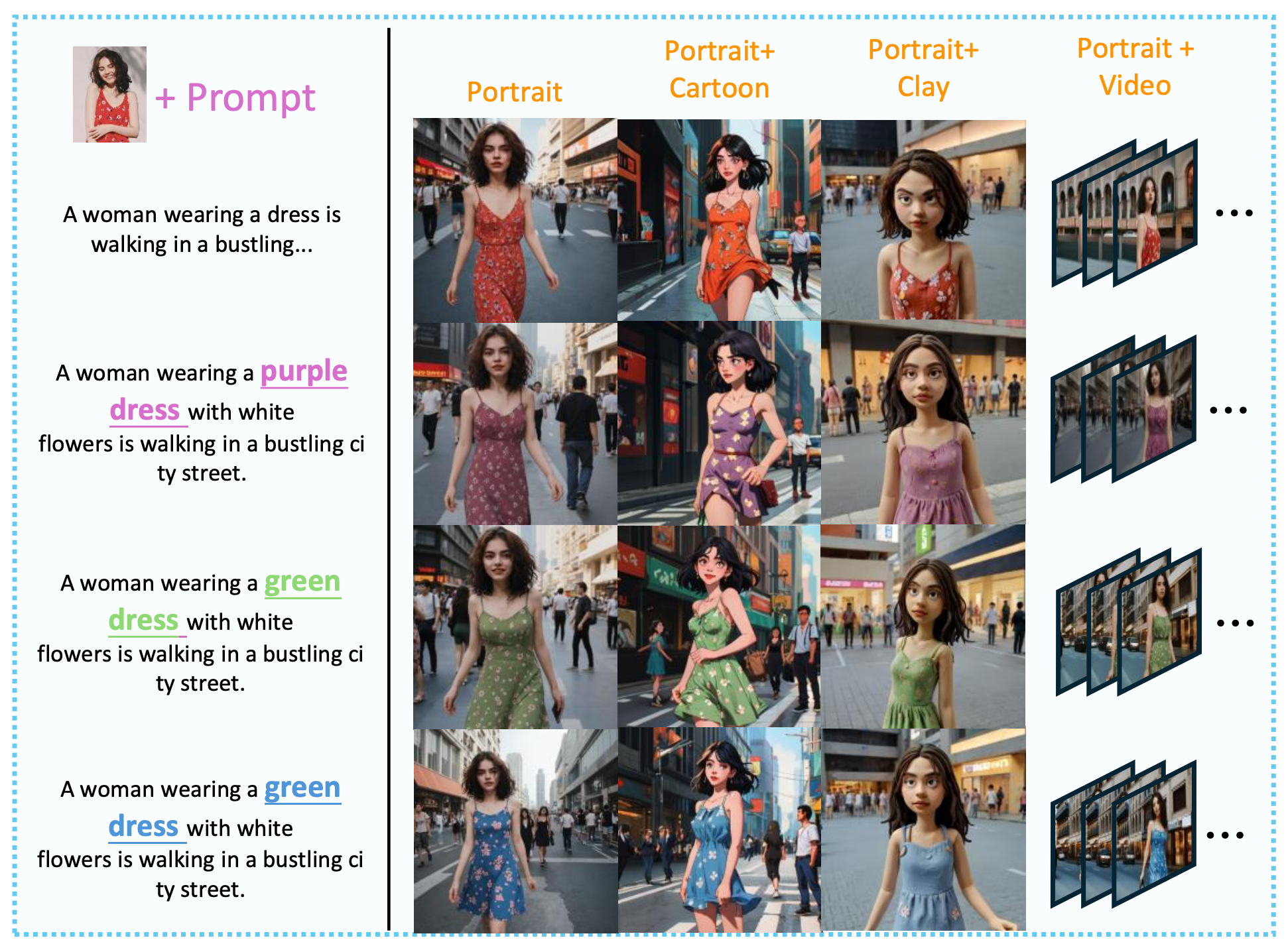

EMMA: Your Text-to-Image Diffusion Model Can Secretly Accept Multi-Modal Prompts

Arxiv 2024